Artificial intelligence stands as one of the most disruptive and transformative forces in human history. With the power to revolutionize fields such as medicine, climate science, energy storage, and process automation, AI has so far shown no limit to its potential.

The same velocity that fuels innovation also magnifies its risks. AI systems can amplify bias, hallucinate falsehoods, or generate synthetic media indistinguishable from reality.

The growth of AI has compressed decades of progress into a handful of years. New use cases and techniques can go from lab paper to global deployment in a single quarter. Technology advances faster than governments can legislate, creating a regulatory lag.

Executives from companies like Google, Microsoft, and OpenAI are often invited to share their expertise and help lead the conversation on ethical and technical questions, yet they recognize that governments cannot move fast enough. Absent any federal guardrails, these companies have tried to police themselves by creating ethics frameworks and internal governance teams, such as ethics boards and committees, to assuage public concerns.

As AI systems become more autonomous and integrate deeper into our daily lives, public calls for safety and transparency are growing. Corporations, on the other hand, seek to mitigate liability and stay ahead of regulatory bodies through self-regulation.

GDPR was the world’s first comprehensive data privacy and protection regulation, and its enforcement and penalty framework served as the model for the EU’s AI legislation, the AI Act.

At the most basic level, the AI Act seeks to ensure that AI systems:

- Are not used to break any laws

- Collect and use data legally and ethically

- Do not discriminate against any group or individual

- Do not manipulate or deceive in any way

- Do not invade an individual’s privacy or cause harm

- Are employed responsibly

- Can be developed and tested in supervised regulatory sandboxes, with relaxed constraints that promote innovation

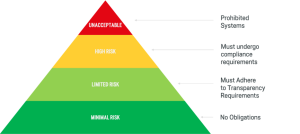

The AI Act uses a risk-based approach to regulate AI systems. This framework sorts AI systems into four distinct risk levels (unacceptable, high, limited, and minimal), each with proportionate obligations and restrictions.

While GDPR and the AI Act share similar enforcement and penalty structures, their oversight models differ. GDPR is enforced locally by each member state’s Data Protection Authority (DPA). The AI Act is enforced by each member state’s Market Surveillance Authority (MSA) and overseen centrally by the European Commission’s AI Office to maintain consistency across the EU. Both GDPR and the AI Act use a tiered penalty model that increases fines for more egregious or repeated offenses, though the percentages differ between the two laws.

The United States, on the other hand, has veered sharply from Europe’s unified structure under a new administration. President Biden’s 2023 Executive Order 14110 sought to balance innovation with safe AI practices and promoted coordination across federal agencies.

At the start of his 2025 term, one of President Trump’s first Executive Orders revoked Biden’s EO 14110, reversing, softening, or simply renaming several oversight initiatives. For example, the AI Safety Institute dropped “Safety” from its name, signaling a shift toward deregulation and speed.

Absent any federal legislation, individual states have begun drafting their own AI regulation, creating a disparate regulatory environment. Each state may use different definitions, enforcement mechanisms, and risk thresholds.

Private companies operating nationally are finding themselves in a maze of nuanced, overlapping obligations. Because state-level laws can be aggressive, the risk of non-compliance increases even under a federally permissive administration.

Globally, AI regulation resembles a patchwork of legislation that uses the EU’s model as a guide and is converging on the core concepts of the EU AI Act: transparency obligations, high-impact systems, and human oversight. These terms have become the lingua franca for companies and governments worldwide.

It’s the same pattern seen with GDPR. Europe wrote the detailed rules, and the rest of the world gravitated towards them for lack of a cleaner alternative.

Recommendations for Responsible Adoption

1. Collaborate with industry bodies:

Engage with groups like the IAB and standards committees defining AI guidance.

2. Bring legal in early:

Translate emerging obligations into contracts, products, and data policies.

3. Audit training data:

Vet datasets for legality, bias, and provenance before they reach production.

4. Maintain human oversight:

Keep escalation paths for critical decisions and monitor models for drift.

5. Keep detailed decision logs:

Audit trails protect against both regulatory and reputational fallout.

6. Test for bias:

Treat fairness like any other QA process.

7. Build for change:

Design modular systems that can adapt as laws evolve.

8. Assess your markets:

Align AI practices with regional expectations.

9. Vet your vendors:

Accountability extends down the supply chain.

10. Use compliance tools:

Leverage readiness checkers and risk frameworks to measure exposure early.
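As one illustration of recommendation 6, a fairness test can run alongside other QA checks. The sketch below is a minimal example, not a compliance tool: it compares the rate of positive outcomes across groups and fails when the lowest rate falls too far below the highest. The function names, the sample data, and the 0.8 threshold are all assumptions chosen for illustration; the right metric and threshold depend on the use case and the applicable law.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive outcomes per group.

    records: iterable of (group, outcome) pairs, where outcome is True/False.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        if outcome:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def parity_ratio(rates):
    """Lowest group rate divided by the highest (1.0 means equal rates)."""
    if not rates:
        return 1.0  # nothing to compare
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a positive outcome
    return min(rates.values()) / highest


def passes_fairness_check(records, min_ratio=0.8):
    """Hypothetical QA gate: True when the parity ratio meets the threshold."""
    return parity_ratio(selection_rates(records)) >= min_ratio


# Hypothetical sample: (audience segment, did the model approve?)
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", True),
]
```

A check like `passes_fairness_check(sample)` could sit in the same test suite as functional tests, so that a model update that widens the gap between groups blocks a release in the same way a failing unit test would.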

While AdTech is not at the epicenter of the AI debate, its large-scale data pipelines, automated decision systems, and regional privacy constraints exposed governance challenges there earlier than in other industries.

AdTech has already faced enforcement for non-compliance, and the lessons it learned about transparency, bias, consent, and data ethics echo across every industry where automated decision-making occurs.

AI will continue to move quickly. Legislation will try to keep up. It’s a cat-and-mouse game, and the future of AI governance will not come from a single regulator. It is up to companies to act on responsible principles. Regulation may lag, but responsibility doesn’t have to.
